Facebook Leaked Reports and Algorithms

In September 2021, The Wall Street Journal published a leaked 2019 Facebook report on Instagram's harmful effects on teens. In the report, Facebook explicitly acknowledged that Instagram contributes to teens feeling bad about their self-image. Despite this knowledge, the company kept the findings from the public and neglected the issue.

The leaked report, which drew on surveys of Instagram users, found that Instagram worsened body-image issues for one in three teen girls who responded. The report also indicated that Instagram was becoming a source of anxiety and depression for users across genders and age groups. In addition, when respondents were asked whether Instagram prompted suicidal thoughts, 19% said yes, and over 40% said Instagram made them feel “unattractive.”

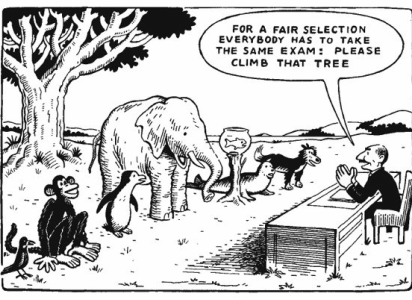

Much of the reason adolescent Instagram users are developing mental health issues comes down to one factor: algorithms. Sahar Maisha (’22), a student in the Software Engineering Major, explained what algorithms are: “At the highest level, algorithms are designed using data sets, and setting formulas to use those data sets. The obvious problem is potential bias. For example, if we’re on social media, and we like a post, that post is added to a data set of posts that Instagram uses to find posts similar to show up on your explore page.”
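Maisha's description of the explore page, where a liked post joins a data set used to find similar posts, can be illustrated with a small content-based recommender. The Python sketch below is hypothetical: it assumes each post is described only by a set of tags and uses Jaccard similarity (shared tags divided by all distinct tags) as the measure of “similar.” Instagram has not published how its explore page actually works.

```python
# Hypothetical candidate posts, each described only by tags.
# Real systems use far richer signals than this.
CANDIDATES = {
    "beach_workout": {"fitness", "beach", "summer"},
    "gym_selfie": {"fitness", "selfie"},
    "cat_video": {"cats", "funny"},
    "pasta_recipe": {"cooking", "italian"},
}


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of tags two posts have in common (0 = none, 1 = identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def explore_page(liked: list[set[str]], k: int = 2) -> list[str]:
    """Rank candidates by their best similarity to any post the user liked."""
    def best_match(name: str) -> float:
        return max((jaccard(CANDIDATES[name], tags) for tags in liked), default=0.0)

    return sorted(CANDIDATES, key=best_match, reverse=True)[:k]


liked_posts = [{"fitness", "summer"}]  # the user liked a single fitness post
print(explore_page(liked_posts))
```

Liking one fitness post is enough to push both fitness posts to the top of this toy explore page, a small-scale version of the bias Maisha warns about.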

Queen Carrasco (’22), another Software Engineering Major, added: “Algorithms are operations that sort posts based on publication time, user interests, user location, etc. The more engagement (likes, comments, shares) a post receives, the more likely it is to be favored by the algorithm.”
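Carrasco's description can be sketched as a scoring function that combines engagement, recency, and interest. In this hypothetical Python example, the weights (shares count more than comments, comments more than likes), the recency decay, and the interest boost are all assumptions made for illustration; location is left out for brevity, and real ranking systems are not public.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    likes: int
    comments: int
    shares: int
    hours_old: float
    matches_interests: bool  # hypothetical flag: does the post fit the user's interests?


# Hypothetical weights; platforms do not publish theirs.
WEIGHTS = {"likes": 1.0, "comments": 2.0, "shares": 3.0}
INTEREST_BOOST = 1.5


def score(post: Post) -> float:
    """Weighted engagement, shrunk as the post ages, boosted if it matches interests."""
    engagement = (
        WEIGHTS["likes"] * post.likes
        + WEIGHTS["comments"] * post.comments
        + WEIGHTS["shares"] * post.shares
    )
    recency = 1.0 / (1.0 + post.hours_old)  # newer posts keep more of their score
    boost = INTEREST_BOOST if post.matches_interests else 1.0
    return engagement * recency * boost


def rank_feed(posts: list[Post]) -> list[Post]:
    """Return posts sorted from highest to lowest score."""
    return sorted(posts, key=score, reverse=True)


feed = rank_feed([
    Post("quiet", likes=10, comments=1, shares=0, hours_old=1, matches_interests=True),
    Post("viral", likes=500, comments=80, shares=40, hours_old=5, matches_interests=False),
    Post("old", likes=900, comments=100, shares=50, hours_old=72, matches_interests=True),
])
print([p.post_id for p in feed])
```

With these made-up numbers, the heavily shared post ranks first even though it does not match the user's interests, and the three-day-old post falls behind it despite having more total engagement.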

According to The Wall Street Journal's reporting on the leaked documents, Instagram's algorithms may have begun showing users more of the content they did not want to see because of a malfunction and flaws in how the algorithm was developed, which in turn worsened users' mental health.

Maisha proposed a solution: “Something that I believe is a great addition, which some websites already do, is ask for your preferences of what you want to see in your feed. For example, after setting up a Pinterest profile, it asks you to label which categories you would like to see more of on your feed to best suit your interests. Maybe more mainstream social media, like Instagram and Facebook, can add a feature that continuously reassures users and asks ‘Do you want to see more posts like this?’ Just prompting that question will pivot us towards less bias, and more control over what we want to see on the internet.”
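Maisha's proposal can be sketched as explicit user input that sets and adjusts the weights a feed draws from. In the hypothetical Python class below, onboarding works like the Pinterest setup she describes, and each answer to “Do you want to see more posts like this?” either raises a topic's weight or removes the topic. The class name, the step size, and the opt-out rule are assumptions, not features of any existing app.

```python
from dataclasses import dataclass, field


@dataclass
class FeedPreferences:
    """Hypothetical per-user topic weights that a feed could sample from."""
    weights: dict[str, float] = field(default_factory=dict)

    def onboard(self, chosen_topics: list[str]) -> None:
        """Pinterest-style setup: equal starting weight for each topic the user picks."""
        self.weights = {topic: 1.0 for topic in chosen_topics}

    def answer_prompt(self, topic: str, wants_more: bool) -> None:
        """Apply an answer to 'Do you want to see more posts like this?'."""
        if wants_more:
            self.weights[topic] = self.weights.get(topic, 0.0) + 1.0  # user asked for more
        else:
            self.weights[topic] = 0.0  # user opted out of this topic

    def feed_mix(self) -> dict[str, float]:
        """Fraction of the feed each topic would receive."""
        total = sum(self.weights.values())
        return {t: w / total for t, w in self.weights.items()} if total else {}


prefs = FeedPreferences()
prefs.onboard(["art", "cooking", "fitness"])
prefs.answer_prompt("cooking", wants_more=True)
prefs.answer_prompt("fitness", wants_more=False)
print(prefs.feed_mix())
```

After one “yes” for cooking and one “no” for fitness, this mix gives cooking two thirds of the feed, art one third, and fitness none. The user's stated choice, not an inferred signal, decides what they see.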

Social media users need to understand how they can change what appears in their feeds so they don't get caught in feedback loops created by the algorithm. “When it comes to mental health, I am ultimately doubtful that social media companies such as Facebook will change their algorithms. The addictive nature of social media is what allows so many companies to expand their algorithms. A more health-conscious algorithm would result in a decrease in profit made. That is not to say that there will not be some change. Algorithms might be dictated by the user; built solely on the choices of the individual and not the shared common interest of those around them,” Carrasco noted.

Maisha added, “A lot of teens, including myself, can get stuck comparing ourselves, bodies, and lives to others that we see online. We spend a lot of time on their posts, that Instagram assumes we’re admiring, when really, it’s hurting us. Algorithms are black-and-white, so to algorithms, more time on a single post equals more posts you want to see. They can’t read your emotions and feelings, unfortunately.”
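The loop Maisha describes, where time spent is read as interest, can be shown with a toy simulation. This Python sketch is hypothetical and is not Instagram's code: it assumes each topic's share of the next feed is proportional to the total time already spent on it, and that the user lingers three times as long on posts that invite comparison as on other posts.

```python
# Hypothetical seconds a user lingers on each kind of post.
# None of these numbers come from Instagram.
DWELL_SECONDS = {"comparison": 12.0, "friends": 4.0, "hobbies": 4.0}


def feed_shares(watch_time: dict[str, float]) -> dict[str, float]:
    """Each topic's share of the next feed is proportional to time already spent on it."""
    total = sum(watch_time.values())
    return {topic: t / total for topic, t in watch_time.items()}


def simulate(rounds: int, posts_per_round: int = 10) -> dict[str, float]:
    """Run the time-spent feedback loop and return the final feed shares."""
    watch_time = {topic: 1.0 for topic in DWELL_SECONDS}  # start with no preference
    for _ in range(rounds):
        shares = feed_shares(watch_time)
        for topic, share in shares.items():
            shown = share * posts_per_round  # expected posts of this topic shown
            watch_time[topic] += shown * DWELL_SECONDS[topic]  # time the user lingers
    return feed_shares(watch_time)


for rounds in (0, 1, 5, 20):
    share = simulate(rounds)["comparison"]
    print(f"after {rounds:2d} rounds: {share:.0%} of the feed is comparison posts")
```

Starting from a third of the feed, the share of comparison posts grows every round. The simulation measures only seconds, never how the user feels, so it cannot tell admiration from distress, which is Maisha's point.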

More than 40% of Instagram's users are 22 or younger. Social media's impact on this generation of users is extensive, and its effects on users' mental health deserve open discussion. Maisha commented, “Thankfully, mental health has become a more mainstream issue now than it was 10 years ago. If you ask students 10 years ago about what mental health mediums and spaces are available to them, they wouldn’t have been able to name a single one. Now with the growing awareness for mental health, a lot of tech companies incorporate it, in applications and on the job. Even at Tech, there are resources like our guidance counselors, cool down rooms, and other advisors that can help if social media is negatively affecting your mental health.”

Dounia Ouzidane (she/her) is a co-editor for STEM and manager of The Survey’s social media team. Dounia...